Specifically, this resolves YES if:

(1) A new benchmark is announced before the end of 2025; and

(2) The best AI result published within three months after the announcement is less than half of the human-level target. (For example, if human-level performance is claimed to be 80%, an AI would need to reach at least 40% to prevent a YES resolution.)

If multiple new benchmarks are created in 2025, this will resolve YES if condition 2 is true for any of them.
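
The two conditions and the multiple-benchmark clause can be sketched as a small check. This is only an illustrative reading of the criteria, not an official resolution tool: the function names, the `(published_date, score)` input shape, the inclusive three-month window, and treating "no published result" as a score of 0 are all assumptions.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift d forward by `months`, clamping the day to the end of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def benchmark_meets_criteria(announced, human_target, results,
                             cutoff=date(2025, 12, 31), window_months=3):
    """Return True if one benchmark satisfies conditions (1) and (2).

    results: iterable of (published_date, score) pairs, with score in the
    same units as human_target (e.g. percent). Hypothetical input shape.
    """
    # Condition (1): the benchmark is announced before the end of 2025.
    if announced > cutoff:
        return False
    # Condition (2): best result within the window is below half the target.
    deadline = add_months(announced, window_months)  # assumed inclusive
    in_window = [score for published, score in results
                 if announced <= published <= deadline]
    best = max(in_window, default=0.0)  # assumption: no result counts as 0
    return best < human_target / 2


def market_resolves_yes(benchmarks):
    """YES if any benchmark (a dict of keyword arguments) meets both conditions."""
    return any(benchmark_meets_criteria(**b) for b in benchmarks)
```

With the 80% example above, a best in-window score of 39 satisfies condition (2), while a score of exactly 40 does not, because the comparison is strict.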

Update 2025-09-01 (PST) (AI summary of creator comment): Resolution Criteria Update:

CPU or compute cost caps will be ignored when evaluating the AI performance.

Update 2025-03-24 (PST) (AI summary of creator comment): Human-Level Target Update:

The human performance target is defined as 60% based on TechCrunch's report.

Consequently, condition (2) will be evaluated as the best AI result needing to be less than half of 60% (i.e., below 30%).

Update 2025-03-24 (PST) (AI summary of creator comment): Human Performance Target and AI Score Requirement

The human performance target is taken to be an average of 60%, so the market resolves YES if no AI system scores 30% or higher within three months of a new benchmark's announcement.

Compute Resources Ignored

Any amount of compute resources is acceptable; CPU or compute cost caps are ignored in the evaluation.

Multiple Benchmark Clause

If multiple new benchmarks are released during 2025, the market cannot resolve as NO until the end of the year, acknowledging that later benchmarks could provide additional opportunities for meeting condition (2).

@traders TechCrunch reports average human performance of 60%, so this will resolve YES if no AI system using any amount of compute resources reaches a score of 30% in the next three months.

https://techcrunch.com/2025/03/24/a-new-challenging-agi-test-stumps-most-ai-models/

But note that I also added a clause for multiple new benchmarks being released this year. So this can't resolve NO until the end of the year, in case another benchmark is created that meets the criteria.

@traders Sorry I forgot to resolve this sooner. According to the leaderboard, the best solution for ARC AGI 2 is still just under 30%, so this resolves YES.

@Bayesian if someone makes a market on 50% by EOY I'd bet against you there! (not at 80%, but at somewhere around even odds)

@TimothyJohnson5c16 Can this resolve YES now? I'm not aware of any reported score greater than 30%.

@mathvc Thanks, the clock starts today on the three months to see what the best AI result is.

I'm not sure how to define the "human performance" target though. I understand every question was solved successfully by at least two people, but I'm not sure what the average correct percentage is.

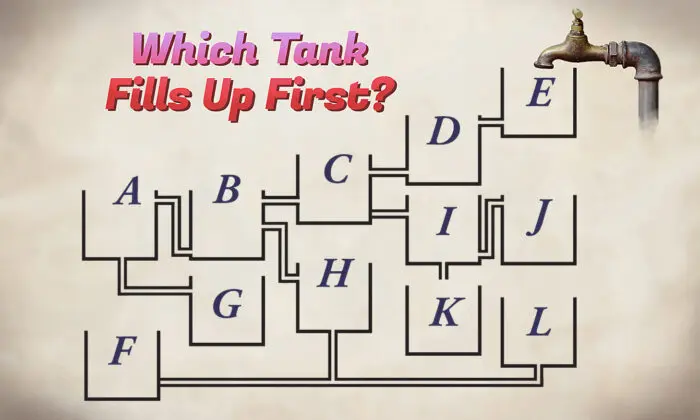

@mathvc o3 mostly succeeded at visual reasoning for the original ARC-AGI benchmark though. I'm curious how much harder they can make it while still keeping it easy for humans to solve.

@TimothyJohnson5c16 ARC is a special kind of visual reasoning (discrete 2D grids). There are many visual reasoning tasks beyond that.

@Nick6d8e Hmm, good question. I'm interested in comparing with o3's performance on ARC-AGI-1, and I understand they spent up to $1,000 per question, so I think I'll ignore the CPU cap.