Data is currently at

https://data.giss.nasa.gov/gistemp/tabledata_v4/GLB.Ts+dSST.csv

or

https://data.giss.nasa.gov/gistemp/tabledata_v4/GLB.Ts+dSST.txt

(or such updated location for this Gistemp v4 LOTI data)

January 2024 might show as 124 in hundredths of a degree C; this is +1.24C above the 1951-1980 base period. If it shows as 1.22 then it is in degrees, i.e. 1.22C. The same logic/interpretation will be applied.
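As a rough illustration of the interpretation above, here is a minimal sketch of turning a table entry into degrees C. The function name and the rule "no decimal point means hundredths of a degree" are assumptions made for this example, not something the data provider documents.

```python
def loti_to_degrees(raw: str) -> float:
    """Convert a GISTEMP table entry to degrees C.

    Assumption for this sketch: an entry without a decimal point is in
    hundredths of a degree; an entry with one is already in degrees.
    """
    text = raw.strip()
    if "." in text:
        return float(text)        # e.g. "1.22" -> 1.22 C
    return int(text) / 100.0      # e.g. "124"  -> 1.24 C


print(loti_to_degrees("124"))   # 1.24
print(loti_to_degrees("1.22"))  # 1.22
```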

If the version or base period changes then I will consult with traders over the best way for any such change to have the least effect on betting positions, or consider N/A if it is unclear what the sensible least-effect resolution should be.

Numbers are expected to be displayed to a hundredth of a degree. The extra digit used here is to ensure understanding that +1.20C resolves to an "exceeds 1.195C" option.
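To make the extra-digit convention concrete, here is a small sketch that maps a reported value to its bin. The edge list is taken from the bin tables quoted later in this thread; the function name and label format are assumptions made for this example.

```python
from bisect import bisect_right

# Bin edges in degrees C (0.05 C wide, offset by 0.005 so that a value
# reported to hundredths can never land exactly on an edge).
EDGES = [0.945, 0.995, 1.045, 1.095, 1.145, 1.195, 1.245]


def bin_label(loti: float) -> str:
    """Return the label of the bin that contains a reported LOTI value."""
    i = bisect_right(EDGES, loti)   # number of edges at or below the value
    if i == 0:
        return f"<{EDGES[0]:.3f}"
    if i == len(EDGES):
        return f">{EDGES[-1]:.3f}"
    return f"{EDGES[i - 1]:.3f}-{EDGES[i]:.3f}"


print(bin_label(1.20))  # 1.195-1.245
```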

Resolves per the first update seen by me or posted, as long as there is no reason to think the data shown is in error. If there is reason to think there may be an error then resolution will be delayed at least 24 hours. A minor later update should not cause a need to re-resolve.

@gonnarekt The preliminary point for the last day of ERA5 keeps July at 16.678 C.

In my ERA5->LOTI model the bias between the datasets is corrected with an adjustment of -0.04 C to 16.637 C to convert to LOTI for July (corresponding to a LOTI unadjusted of 0.973 C).

The LOTI is then adjusted by own past prediction errors for a point prediction of 0.984 C.

@Weatherman 🤷 There is the GHCN daily, but this is quite a bit different from the GHCN monthly dataset, although I haven't actually calculated how much. Not much motivation for me when there is no corresponding daily ERSST data to use (are you going to correlate on only the LAND data, or use some other dataset as a proxy for ERSST?)

Beyond that there are only 2 days of ERA5 data left, so whatever difference of opinion remains is more likely in the ERA5->LOTI model used:

These are the residuals that I've recorded for my own past LOTI prediction errors once all of ERA5 data is in (most of it is within the range of what might be determined by what run of ghcnm they randomly use):

At some point I started recording an extra decimal place, once I started outputting my own gistemp data that doesn't round the data, since the numbers were too small:

own_data_error_residuals = np.array([-0.02, 0.01, 0.03, 0.01, 0.02, 0.03, 0.0, -0.01, 1.360 - 1.339, 1.225 - 1.173, 1.073 - 1.069, 1.032 - 1.045])

The bias is only 0.01 C....
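For reference, a minimal sketch of how the bias follows from the residuals listed above, and how it moves the unadjusted 0.973 C to the 0.984 C point prediction quoted earlier. It assumes the residuals are (actual - predicted), so adding their mean corrects an under-prediction; the plain-list form and variable names are choices made for this example.

```python
from statistics import mean

# Residuals copied from the array above, assumed to be (actual - predicted).
own_data_error_residuals = [
    -0.02, 0.01, 0.03, 0.01, 0.02, 0.03, 0.0, -0.01,
    1.360 - 1.339, 1.225 - 1.173, 1.073 - 1.069, 1.032 - 1.045,
]

# Simple mean bias of past predictions.
bias = mean(own_data_error_residuals)
print(round(bias, 3))  # 0.011

# Apply the mean bias to the unadjusted LOTI estimate for July.
unadjusted_loti = 0.973
adjusted_loti = unadjusted_loti + bias
print(round(adjusted_loti, 3))  # 0.984
```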

@parhizj ERA5 data could still be off quite significantly, especially in 2024 with the strong ENSO effect (El Niño). The average of the daily GHCN values should give an idea of how the monthly GHCN ends up, though. I once looked into this data but it is a bit difficult to process and to deal with its data issues. I find this NCEP reanalysis data interesting to look into as well: http://www.karstenhaustein.com/climate

@Weatherman ERA5 is a reanalysis so I don’t know whether being “off” is the right idea; maybe you mean it just has different biases and uncertainty than gistemp (and its input datasets)? (This should be accounted for in the ERA5->LOTI model though). You could use either dataset for ground truth.

@gonnarekt for me the variance noticeably went up today from yesterday's run due to model variance (edit2: causing the std. dev. to rise from yesterday by ~0.007 C).

These are the LOTI temps (superensemble debiased, without adjustment of own past loti prediction errors) that I've been getting since I've started using the super ensemble method I describe below (ended up back where I started today).

So far the limited ongoing validation has shown a slight bias towards being a bit cold (MAE ERA5-predicted is +0.02 C), and a smaller one for the unbiased version (MAE ERA5-predicted is +0.01 C), but this is still fairly small as it doesn't result in very different LOTI temps (a difference of 0.002 C in the LOTI temp, this being data for the latter half of the month).

When I use my own past errors in these markets as a further adjustment the LOTI temps go up by ~0.011 C:

LOTI predictions for July:

07/14 - 07/24: 0.966, 0.959, 0.954, 0.950, 0.948, 0.948, 0.954, 0.956, 0.956, 0.957, 0.966

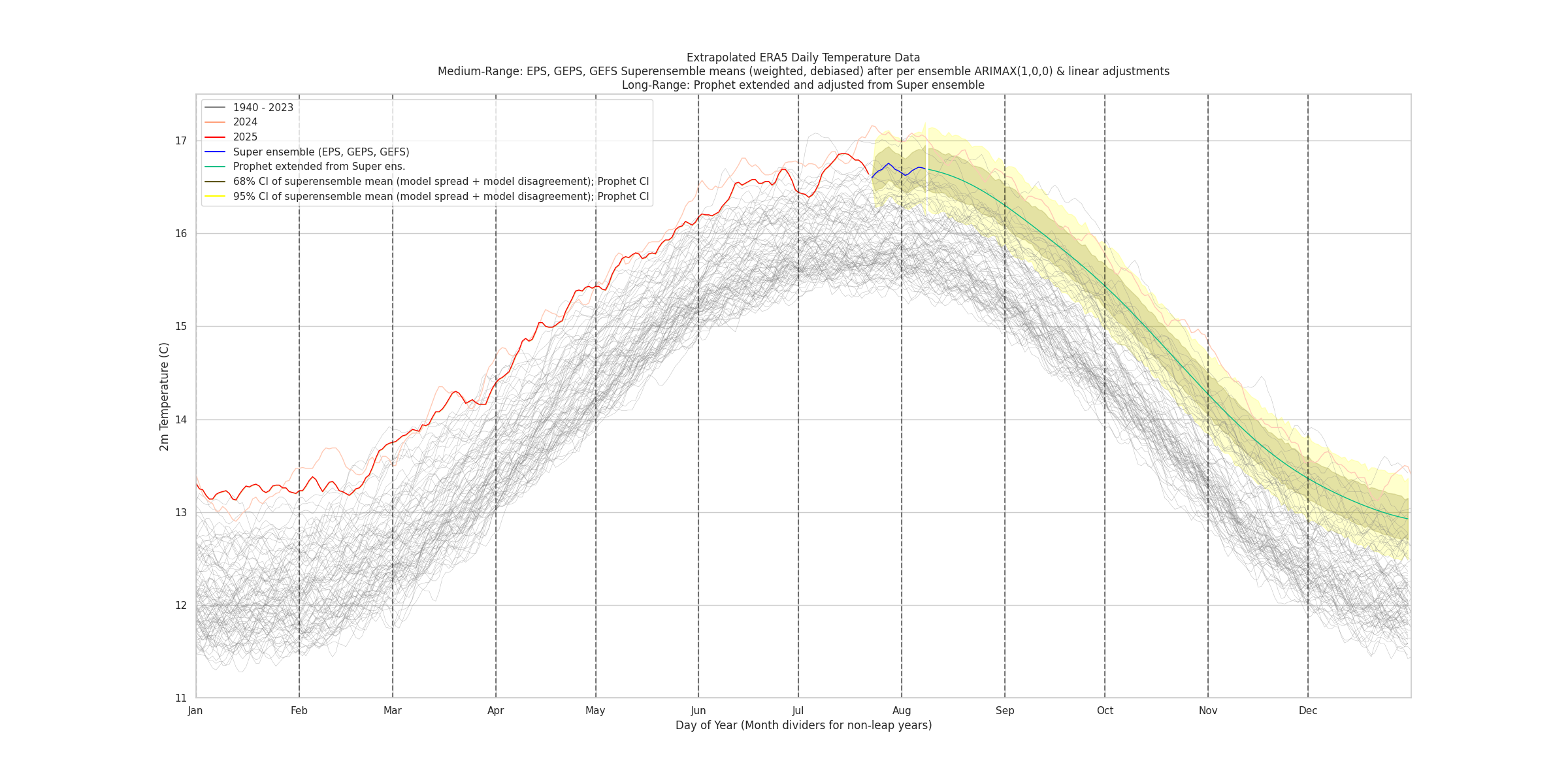

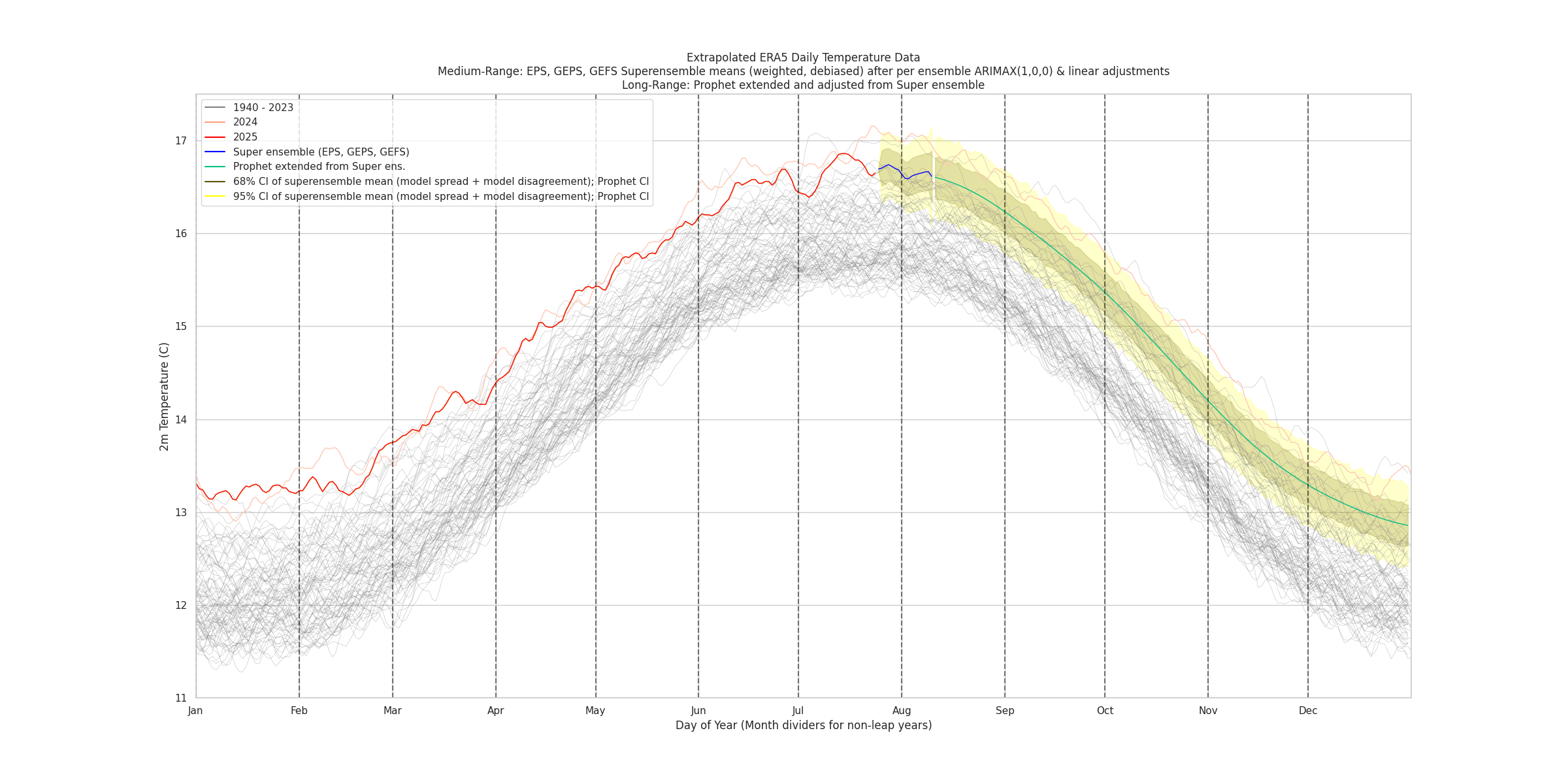

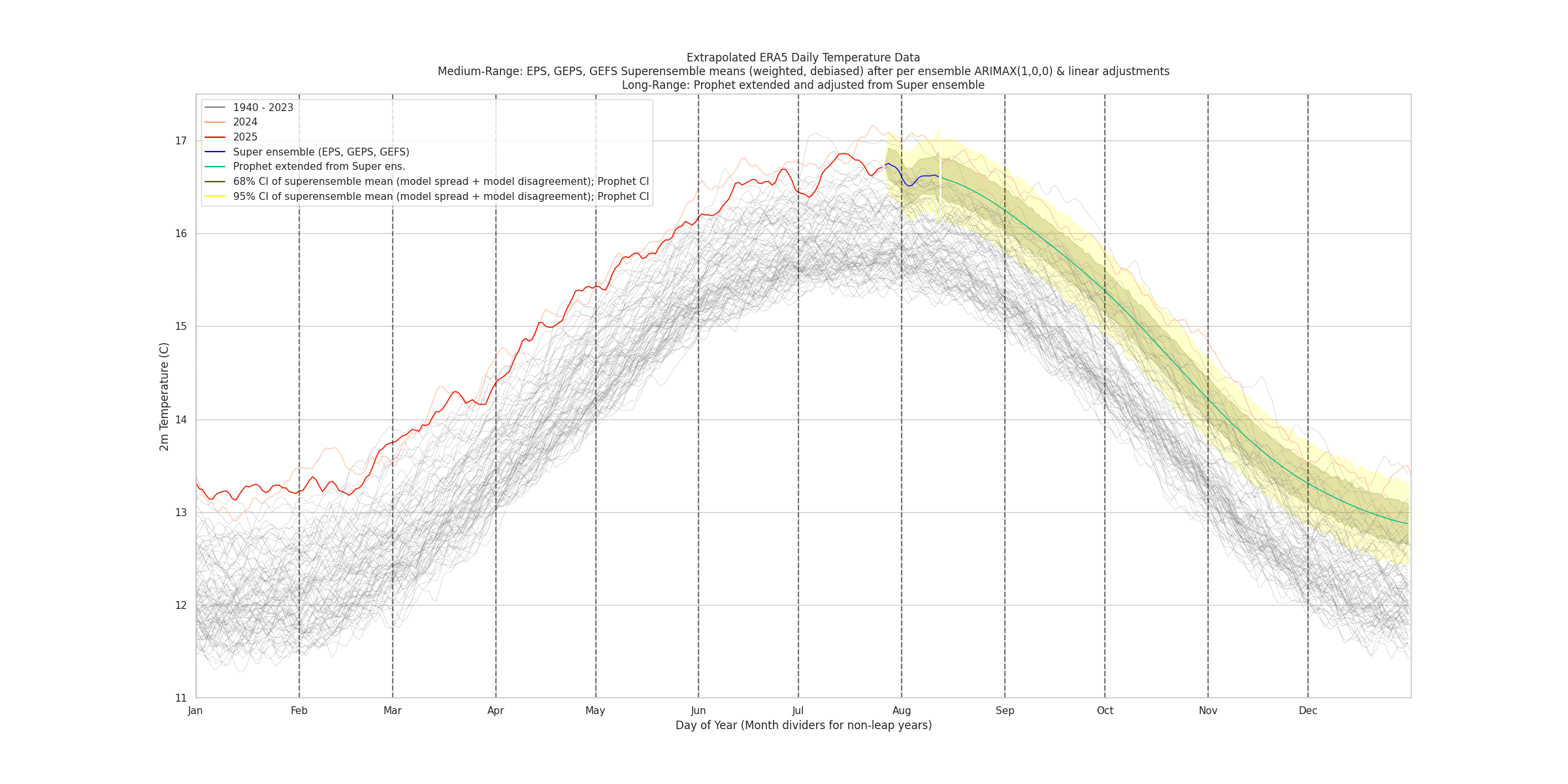

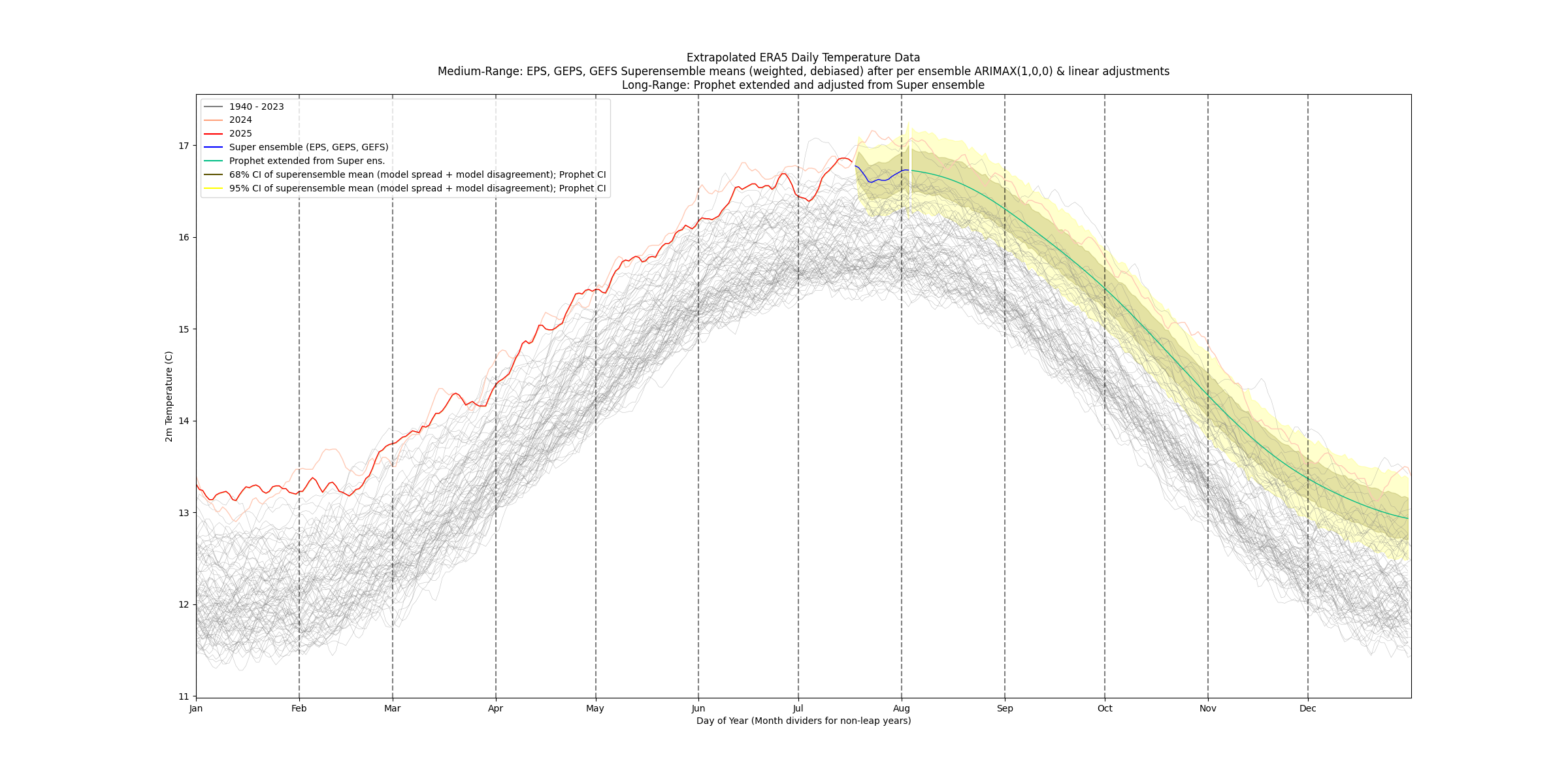

chart from (mostly 00Z) ensembles today (predicting an ERA5 July temp of 16.591 C):

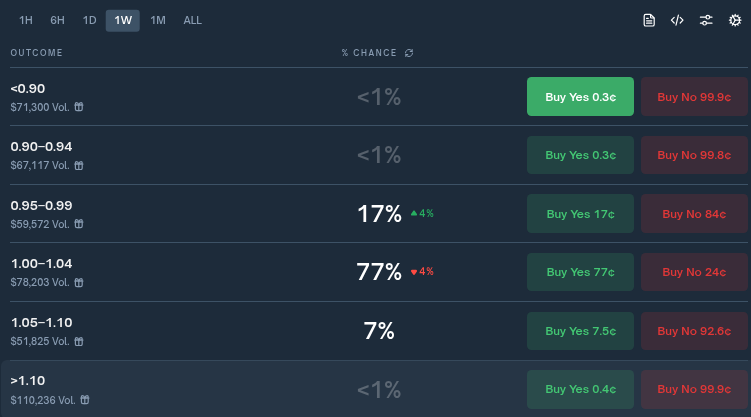

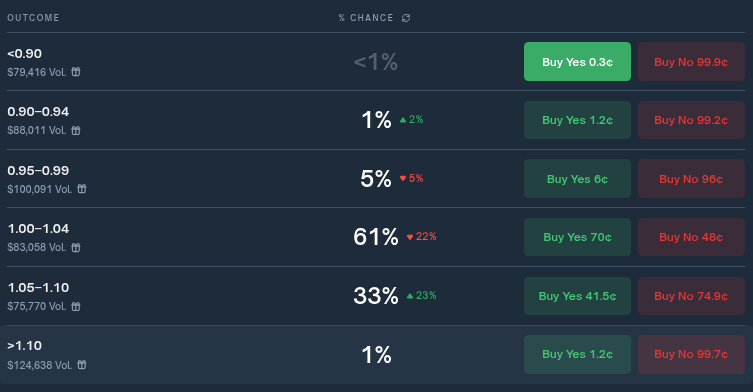

polymarket implies ~1.02C:

the superensembles from my model still imply ~ 0.968 C (an ERA5 temp of 16.594 C from the superensemble that isn't debiased; its bias is still a tiny bit on the cool side on average ~0.01C).

The simple ARIMA statistical model I use for reference (with doy harmonics) still implies much higher at 1.06 C (an ERA5 temp of 16.669 C); this makes the dynamic models 0.1 C cooler than the statistical in ERA5 temps. I could interpret this as polymarket sitting roughly halfway between these but slightly favoring the statistical (assuming the use of the same ERA5->GISTEMP model).

Not much change in the forecast chart for the rest of this month (a small rise and decline at the end of the month). The forecast temps for next month (August) have been trending cooler in the last several runs for the start of the month.

Only a few days left in July, and on track to a LOTI of 0.972 C

(without adjusting for own past ERA5->LOTI prediction errors, which pushes it to 0.981 C)

chart of the super ensemble still shows a dip at the end of the month:

Polymarket still favoring the next bin (1.00-1.04) ..

Still putting up limit orders for anyone that wants to bet towards polymarket's chosen bin.

@parhizj i still have 0.99-1.01 values as favourable. i can say that Polymarket doesn’t reflect real probs because of different strategies and players; i guess 2 bins are now more clear. 0.95-1.05 is a good prediction

Explain the strategy of betting up 1.05-1.10 to 33%! 😭

I got 0.983 C as my prediction from my run earlier today (0.972 C not adjusted by own past forecast errors).

The ERA5 temp is 16.596 C.

For reference, as I mentioned before I do use my own past errors to generate a sharper guess of the std. dev. for my ERA5->LOTI model using the unadjusted temps (from own errors) once the whole month's data is in, and then use the min/max from the 99.5% CI for the std dev to generate the lower, upper bound for the probabilities given the adjusted LOTI:

In other words I think anywhere in these two ranges (most likely in the middle) are fair estimates for what the bins should be at:

99.5% Confidence Interval for Standard Deviation: (0.0295, 0.0456)

Std. dev.: 0.0295

| Bin | Probability (%) |
|---|---|
| <0.945 | 9.9 |
| 0.945-0.995 | 55.8 |
| 0.995-1.045 | 32.5 |
| 1.045-1.095 | 1.8 |
| 1.095-1.145 | 0.0 |
| 1.145-1.195 | 0.0 |
| 1.195-1.245 | 0.0 |
| >1.245 | 0.0 |

Std. dev.: 0.0456

| Bin | Probability (%) |
|---|---|
| <0.945 | 20.2 |
| 0.945-0.995 | 40.2 |
| 0.995-1.045 | 31.0 |
| 1.045-1.095 | 8.0 |
| 1.095-1.145 | 0.7 |
| 1.145-1.195 | 0.0 |
| 1.195-1.245 | 0.0 |
| >1.245 | 0.0 |

Given that the upper bound for the probability of the 1.045-1.095 bin is 8%, 33% seems nuts.
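For anyone wanting to check the bin numbers, here is a minimal sketch that assumes a normal distribution centred on the adjusted point prediction of 0.983 C, evaluated at the two ends of the std. dev. interval. The normal assumption and the helper names are mine for this example; with these rounded inputs it comes out within a tenth or two of a percentage point of the figures above, presumably because the original run used unrounded values.

```python
from math import erf, sqrt

EDGES = [0.945, 0.995, 1.045, 1.095, 1.145, 1.195, 1.245]


def norm_cdf(x, mu, sigma):
    """Cumulative distribution function of a normal(mu, sigma)."""
    return 0.5 * (1.0 + erf((x - mu) / (sigma * sqrt(2.0))))


def bin_probabilities(mu, sigma, edges):
    """Probability (%) of each bin: below the first edge, between
    consecutive edges, and above the last edge."""
    cdf = [0.0] + [norm_cdf(e, mu, sigma) for e in edges] + [1.0]
    return [100.0 * (hi - lo) for lo, hi in zip(cdf, cdf[1:])]


for sigma in (0.0295, 0.0456):
    probs = bin_probabilities(0.983, sigma, EDGES)
    print(sigma, [round(p, 1) for p in probs])
```

Even with the wider std. dev., this puts the 1.045-1.095 bin at roughly 8%, which is the basis for the comparison with the 33% market price.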

@parhizj the prob of 1.05-1.10 is about 15%; i guess one guy is just making a hedge position to win anyway, there are no other reasons that i can see. I see 1.015 as the middle forecast point now.

@gonnarekt it’s funny 12 days ago you gave me your era5 temp and we pretty much agreed on it, and it did dip for a short time, but it’s now pretty much the same as it was in my forecast 2 weeks ago. Changed only by about 0.006 C.

The big difference in betting for me earlier on is I’ve bet based on my estimate of the larger uncertainty present in the past and the dip in the forecast near the 18th.

@ChristopherRandles only a single day left of era5 data missing, so I can't speculate beyond what I've said 🤷

I did put up a decent sized limit order for bettors

@ChristopherRandles Did a sanity check to make sure I haven't lost my mind...

As a sanity check we can compare the deltas, showing the model I use is acting (approximately and appropriately) as a linear interpolation at this point between the two nearest ERA5 temps and their respective LOTI temp (other months might not be so neat):

Edit v2:

| Date | ERA5 | LOTI | ERA5-2025 | LOTI-2025 |
|---|---|---|---|---|
| July 2024 | 16.908 | 1.203 | 0.230 | 0.219 |
| July 2025 | 16.678 | 0.984 | ~0.00 | 0.000 |
| July 2019 | 16.626 | 0.935 | -0.052 | -0.049 |
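The same sanity check can be written as an explicit linear interpolation between the two neighbouring (ERA5, LOTI) pairs. This is only a sketch of the check, not of the model itself; the function name is made up for this example.

```python
def interpolate_loti(era5, lo, hi):
    """Linearly interpolate LOTI at an ERA5 temp between two
    (ERA5, LOTI) reference points."""
    (x0, y0), (x1, y1) = lo, hi
    return y0 + (y1 - y0) * (era5 - x0) / (x1 - x0)


july_2019 = (16.626, 0.935)
july_2024 = (16.908, 1.203)

# July 2025 ERA5 temp of 16.678 C falls between the two reference years.
print(round(interpolate_loti(16.678, july_2019, july_2024), 3))  # 0.984
```

The interpolated value agrees with the 0.984 C point prediction to three decimals, which is what "acting approximately as a linear interpolation" means here.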

On the ERA5->LOTI process:

For reference again, the ERA5->LOTI model I use is a simple (per-month) linear model; i.e. after splitting the datasets into each month over the 1940+ period, we use a linear model (a separate one for each month) to debias it.

I.e. we debias the ERA5 temps relative to the GISTEMP temps over time (1940+) by year to get a "corrected" ERA5 temp for each month (this is to account for any time-varying relative bias), and then take the means for each dataset and offset it to convert to the anomaly temp: (the GISTEMP month's mean 1940-2024) + (ERA5 corrected month's mean for 2025) - 14 C. (The 14 C is the simple fixed offset to get from anomaly to absolute temp and vice versa).

The LOTI from this is then adjusted one more time from own past forecast errors (a simple mean bias adjustment). Unadjusted, for instance, for above is 0.973.

@gonnarekt with new data and a little correction i get a 0.955-0.995 range; i will check it one more time at the end of next week

@gonnarekt switched to a new model to combine ensemble forecasts, optimizing on bias-variance on arimax adjusted forecasts of ensemble means (this forms a super ensemble, weighting the arimax mean forecasts from EPS, GEPS, GEFS with weights of 0.57, 0.33, 0.10 respectively, and adjusting based on biases found in training; this is roughly in line with my own experience of ranking these models' performance by forecast error).

Right now the expected value overall is still 0.966 C.

I take the variance from the actual ensemble forecasts and weight them to come up with an estimate for the super ensemble's contribution to the overall variance (0.023) for the month. Right now its contribution is slightly larger than the estimate for the contribution of the variance for the ERA5->gistemp model using own prediction errors (0.021).

I don't have confidence that this total overall variance is actually too large, so I'm reducing my bet sizes for a while accordingly.

Edit: Here are the ensemble mean biases estimated from training: (model - era5)

EPS: -0.022

GEPS: +0.047

GEFS: +0.041

EPS predicted temps are slightly cooler than ERA5, while GEPS and GEFS are quite a bit hotter than ERA5.

Unfortunately I lack a diverse set of forecasts for GEPS so its performance and bias are only based on the last few months (whereas for EPS/GEFS I have samples from at least 1 year).
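A rough sketch of how such a weighted, debiased combination could be computed, using the weights and (model - ERA5) biases quoted above. The order of operations (subtract each model's bias, then take the weighted mean) is an assumption, and the input monthly means are hypothetical numbers chosen only to make the example run.

```python
# Weights and training biases (model - ERA5) as quoted in this thread.
WEIGHTS = {"EPS": 0.57, "GEPS": 0.33, "GEFS": 0.10}
BIASES = {"EPS": -0.022, "GEPS": 0.047, "GEFS": 0.041}


def super_ensemble(means, debias=True):
    """Weighted mean of per-model monthly ERA5 forecasts (deg C).

    Assumption for this sketch: each model's bias is subtracted from
    its forecast before the weights are applied.
    """
    total = 0.0
    for model, weight in WEIGHTS.items():
        value = means[model]
        if debias:
            value -= BIASES[model]
        total += weight * value
    return total / sum(WEIGHTS.values())


# Hypothetical arimax-adjusted ensemble means, for illustration only.
hypothetical_means = {"EPS": 16.55, "GEPS": 16.62, "GEFS": 16.61}
print(round(super_ensemble(hypothetical_means), 3))
print(round(super_ensemble(hypothetical_means, debias=False), 3))
```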

The trend has been downward (although slowing) for the last 5 days for the (unadjusted by own errors) LOTI values..

07/14 - 07/18:

0.966

0.959

0.954

0.950

0.948

After adjusting for own past errors, expectation (0.958 C) is still in the second bin, but still with a wide CI estimated for the std. dev.: 99.5% Confidence Interval for Standard Deviation: (0.1044, 0.1611), which puts most of the probability (~45%) still in the lowest bin.

Right now the variance breaks down into the components as such (at least now the super ensemble variance is lower than the ERA5 model variance):

Own error variance (ERA5 only) 0.0207

Super ensemble var from ensembles: 0.0157

@gonnarekt Confused about that shift upwards for you.

I ran the numbers again without the super ensemble bias adjustment and it didn't move much: the (unadjusted on own error) LOTI is 0.951 C, based on the arimax adjusted ensemble means as weighted as I mentioned in the comment above.

For reference what are you getting for your ERA5 month average if you output that in your model?

(super ens bias adjusted): Absolute ERA5: 16.573

(super ens bias unadjusted): Absolute ERA5: 16.576

@gonnarekt From that ERA5 temp you provided my linear ERA5->GISTEMP model indicates something like a GISTEMP of ~1.01 C for July, so it looks like it's mostly disagreement about our forecasts for the ERA5 temp, not the actual ERA5->GISTEMP fitting.

Polymarket right now is leaning towards your estimate of the LOTI.

Updated after today's 00Z ensembles, the temp is holding mostly steady at 16.573 C (with the super ensemble debiasing) corresponding to an (unadjusted by own errors) LOTI of 0.948 C (adjusted upwards to 0.959 C by own errors).

Super ens. without debiasing ERA5 is 16.575 C (so very close).

An updated chart with forecast (still showing a drop coming):

@gonnarekt the upwards adjustment on own errors I refer to is simply a small-sample debiasing based on my own ERA5->Gistemp predictions once all the ERA5 data has come in … in other words most of the recent predictions that I’ve made after the month has finished have been a bit under.

(I use my own errors to come up with a CI for the std. dev of this error, rather than relying on the model std. dev. from the historical data which has distributional shifts and larger variance historically — this variance along with the variance from the super ensemble (the variance plotted) is what I’m using to come up with probabilities).