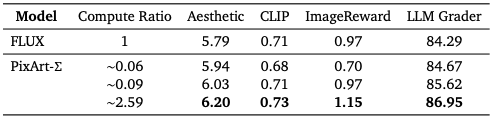

Resolves as YES if there is strong evidence that applying inference-time scaling techniques to generative AI problems (e.g., creative writing, image and video generation, music synthesis) reveals a significant inference-time scaling overhang for aesthetic quality. This discovery must take place and become evident before January 1, 2031, and must occur in at least two distinct modalities.

In this context, an inference-time scaling overhang means that:

Generative models produce significantly better aesthetic outputs when additional compute resources (e.g., memory, parallelization) are devoted to inference, above and beyond what was previously expected or observed from training-time scaling.

This improvement follows a sustained scaling curve over a period of more than 18 months, during which multiple labs or organizations apply and refine inference-time scaling techniques that continue to yield progressively better aesthetic quality in generative model outputs.

These improvements are recognized as going beyond standard incremental gains, indicating that models benefit disproportionately from scaling at inference time relative to their training-time baselines.
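The conditions above can be read as a checklist. The following is a minimal sketch of that reading in Python, not an official resolution procedure. It assumes that all three conditions must hold together, that each is checked per modality, and that "multiple labs" means two or more. The `ModalityEvidence` record and its field names are hypothetical, and the two boolean fields stand in for judgment calls that no code can make.

```python
from dataclasses import dataclass
from datetime import date

DEADLINE = date(2031, 1, 1)  # evidence must be evident before this date
MIN_MODALITIES = 2           # "at least two distinct modalities"
MIN_SUSTAINED_MONTHS = 18    # the trend must last MORE than 18 months
MIN_LABS = 2                 # assumption: "multiple labs" read as two or more


@dataclass
class ModalityEvidence:
    """Hypothetical summary of the evidence gathered for one modality."""
    modality: str                  # e.g. "image", "music", "text"
    evident_on: date               # date the evidence became evident
    sustained_months: int          # length of the observed scaling trend
    labs: int                      # distinct labs or organizations reporting gains
    beyond_training_scaling: bool  # judgment call: exceeds training-time expectations
    beyond_incremental: bool       # judgment call: more than standard incremental gains


def qualifies(e: ModalityEvidence) -> bool:
    """True if a single modality meets every condition in this reading."""
    return (
        e.evident_on < DEADLINE
        and e.sustained_months > MIN_SUSTAINED_MONTHS
        and e.labs >= MIN_LABS
        and e.beyond_training_scaling
        and e.beyond_incremental
    )


def resolves_yes(evidence: list[ModalityEvidence]) -> bool:
    """True if enough distinct modalities qualify."""
    qualifying = {e.modality for e in evidence if qualifies(e)}
    return len(qualifying) >= MIN_MODALITIES
```

Under this reading, qualifying evidence in image generation alone would not be enough. A second modality, such as music or creative writing, would have to meet the same conditions before the deadline.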